By Rob Mitchum // February 16, 2016

The ATLAS experiment at CERN is one of the largest scientific projects in history, with thousands of scientists from around the world working together to analyze the torrents of data flowing from its detectors. From CERN’s Large Hadron Collider in Switzerland, data travels to 38 different countries, where physicists from 174 institutions apply their own analysis techniques to make new discoveries about the laws and structure of the universe. It’s an ambitious effort, but like any large, spread-out collaboration, it carries organizational challenges that grow steeply with the amount of data collected, processed and shared.

Now, scientists with the Computation Institute and the ATLAS Midwest Tier 2 Center have created a new platform to help unify and monitor this busy global hive of research. Working with open source software, the cloud computing testbed of CloudLab, and a team of experts from CERN, the CI’s Ilija Vukotic designed a new data analytics platform for ATLAS. From anywhere in the world, team members can directly access a website (or the platform itself, programmatically via an API) that lets them analyze and visualize the processing of petabytes of data circulating the globe as the project unfolds.

In aggregate, the ATLAS experiment and collaboration can be thought of as a single project, organized around the ATLAS detector at the LHC. But in practice, it’s really a well-aligned constellation of smaller projects and teams working in concert, each using different methods and approaches to pursue a wide range of physics goals including detailed measurements of the Higgs boson, the search for supersymmetry, and dozens of other questions at the forefront of particle physics research. Each analysis group requires its own collection of data sets, typically filtered using bespoke analysis algorithms developed by individuals from each physics team.

Over the past decade, a set of workload, data management, data transfer and grid middleware services have been developed for ATLAS, providing the project with a comprehensive system for reconstruction, simulation and analysis of proton collisions in the detector. These services produce prodigious amounts of metadata which mark progress, operational performance, and group activity — metadata that can be mined to ensure physics teams get the data they need in a timely fashion and can efficiently analyze them once in place.

However, these metadata were difficult to share between computing subsystems and were not in a form suitable for processing by modern data analytics frameworks. Determining who possessed the data you needed and learning the custom tools developed around that data often took months, Vukotic said, a “Tower of Babel” situation that slowed collaboration within the ATLAS ecosystem.

But today, these kinds of distributed “big data” problems are no longer unique to data-intensive sciences such as physics and astronomy. As similar issues appeared in commercial and government sectors over the past decade, open source developers created suites of new analytics tools to address them. Vukotic set out to apply some of these tools to untangle the growing data knots facing ATLAS researchers.

The first challenge was to bring all of the distributed services metadata into one centralized location, rather than leaving it partitioned among the various subsystems and servers. To do so, he used the cluster-based distributed storage software Hadoop, as well as other open source and custom-made utilities, to import data from disparate databases and data streams. He also deployed Elasticsearch to make indexing and searching the billions of imported documents easier and faster.
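To make the import step concrete, here is a minimal sketch of how metadata records might be packaged for Elasticsearch's bulk indexing interface, which accepts newline-delimited JSON in which each document is preceded by an action line. The record fields, site names and the index name `jobs-demo` are hypothetical, and this is not the importer the ATLAS team wrote; it only illustrates the shape of the payload.

```python
import json


def to_bulk_payload(records, index_name):
    """Serialize records into a newline-delimited JSON bulk body.

    Each document is preceded by an "index" action line naming the
    target index, and the body ends with a trailing newline.
    """
    lines = []
    for record in records:
        lines.append(json.dumps({"index": {"_index": index_name}}))
        lines.append(json.dumps(record, sort_keys=True))
    return "\n".join(lines) + "\n"


# Hypothetical job metadata; the field names are assumptions for illustration.
job_records = [
    {"site": "SITE_A", "status": "finished", "wall_seconds": 5400},
    {"site": "SITE_B", "status": "failed", "wall_seconds": 120},
]

payload = to_bulk_payload(job_records, "jobs-demo")
```

A payload of this form could then be posted to a cluster's bulk endpoint. Batching many documents into one request is the usual reason to prefer it over indexing documents one at a time.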

Second, the analytics platform needed to include metadata from the fabrics that ATLAS computing services rely upon. For example, understanding temporal network characteristics between data centers shared by multiple experiments and science domains is fundamental to achieving efficient distributed high-throughput computation.

Therefore, perfSONAR measurements from globally distributed “Science DMZs” (as aggregated by the Open Science Grid’s networking team led by Dr. Shawn McKee at the University of Michigan) were slurped into the platform’s data store for more agile analysis with metadata from other subsystems. Recently, link traffic and flow measurements recorded by ESnet’s LHCONE engineers have also been incorporated, further bringing into relief the variable features of the distributed computing landscape.

Third, flexible and robust infrastructure to host the analytics platform was needed. To quickly ramp up the project, Vukotic turned to CloudLab, a National Science Foundation-funded “build-your-own-cloud” project of the University of Utah, Clemson University, the University of Wisconsin-Madison, the University of Massachusetts Amherst, Raytheon BBN Technologies, and US Ignite. Using the Clemson site, and with the assistance of Lincoln Bryant, a “dev ops” systems engineer at the ATLAS Midwest Tier 2 Center (a high-throughput computing facility partially housed in the sub-basement of the Enrico Fermi Institute’s historic accelerator building and plugged into the global WLCG computing grid), Vukotic quickly created a six-node analytics cluster to test out the new system.

“CloudLab is designed to enable research on the fundamentals of cloud architectures, all the way down to the bare metal of servers and switches,” said CloudLab principal investigator Robert Ricci, research assistant professor at the University of Utah and one of the developers of its core technology, Emulab. “We’re happy to be able to support this project at the Computation Institute and CERN as it seeks to improve the fundamentals of analytics.”

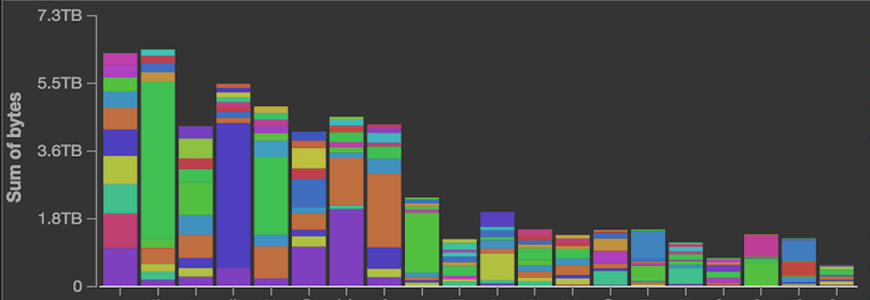

With the new architecture in place, the researchers layered Elastic’s Kibana on top, a browser-based graphical user interface for exploring and visualizing data. With it, one can quickly create custom dashboards revealing new patterns of ATLAS analysis activity; for example, observing which teams in which countries are accessing which kinds of data at any particular moment. Within minutes, ATLAS scientists can gain access to information that previously would have taken weeks to obtain, allowing the central operations team at CERN to watch for computing inefficiencies and ask deeper questions about overall resource consumption and optimization, including network bandwidth.
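The kind of question such a dashboard answers can be sketched in a few lines of plain Python: group access events by a chosen set of fields and count them. The events, team names and data-type labels below are invented for illustration, and the platform itself would run this sort of aggregation inside Elasticsearch rather than in a script.

```python
from collections import Counter


def count_accesses(events, *fields):
    """Count events grouped by the given fields, e.g. country and data type."""
    return Counter(tuple(event[field] for field in fields) for event in events)


# Hypothetical access events; all values are assumptions for illustration.
access_events = [
    {"country": "US", "team": "higgs", "data_type": "derived"},
    {"country": "DE", "team": "susy", "data_type": "derived"},
    {"country": "DE", "team": "higgs", "data_type": "derived"},
    {"country": "US", "team": "higgs", "data_type": "raw"},
]

by_country_and_type = count_accesses(access_events, "country", "data_type")
by_team = count_accesses(access_events, "team")
```

Changing the field list changes the view, which is roughly what happens when a user swaps one dashboard panel for another.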

“It gives a lot of transparency to the system, and will allow us to make significant gains in computational and data access efficiency,” Vukotic said. “It will also help us modernize high energy physics computing at a time when we are asked to do more with less funding.”

The ATLAS analytics platform, built on CloudLab and already in production use by ATLAS distributed computing teams, will soon transition to CERN systems as a component of a general data analytics service for the four major LHC experiments. To speed up complex calculations, Vukotic plans to add a Spark engine to the platform; to boost accessibility for the ATLAS collaboration, the plan is to offer Jupyter notebooks with “connectors” for analytics based on ROOT, a data analysis framework commonly used by physicists. Thus far, the response from ATLAS and CERN scientists has been very positive.

“The ATLAS analytics platform has made it trivial to discern new patterns of activity among the thousand-plus active ATLAS analyzers at any given time, such that we can now more easily gauge the impact of changes to our computing model and data formats,” said Dr. Federica Legger of the Ludwig Maximilian University of Munich, who coordinates distributed analysis for the ATLAS collaboration.

“Efficient use of our resources has always been imperative in ATLAS Distributed Computing,” said Mario Lassnig, Staff Engineer, Experimental Physics Department, CERN. “For example, deciding where to run our computing jobs, which network links we should use for data transfers, or even which data we can delete, requires a tremendous amount of expert knowledge to keep efficiency high.”

“The analytics service provided by the University of Chicago and CloudLab has made it possible to automate these decision processes,” Lassnig continued. “We retrieve a plethora of metrics; for example, the latency between data centres, or the file transfer queues, and process them for use in our machine learning algorithms. In almost real-time, we can figure out clusters of well-connected sites, dynamically adapted by current metrics, or decide to create additional copies of popular data for faster parallel processing.”
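As a rough illustration of the site-clustering idea Lassnig describes, the sketch below groups sites into clusters whenever a chain of low-latency links connects them. It is a simple threshold rule with a union-find structure, swapped in here for the machine learning methods the team actually uses, which the article does not detail. The site names, latency values and the 20 ms cutoff are all hypothetical.

```python
def cluster_sites(latency_ms, threshold_ms):
    """Group sites connected by links at or below threshold_ms.

    latency_ms maps (site_a, site_b) pairs to a measured latency.
    Returns a sorted list of sorted site-name lists.
    """
    parent = {}

    def find(site):
        # Register unseen sites as their own root, then walk to the
        # root with path halving to keep later lookups short.
        parent.setdefault(site, site)
        while parent[site] != site:
            parent[site] = parent[parent[site]]
            site = parent[site]
        return site

    for (site_a, site_b), latency in latency_ms.items():
        root_a, root_b = find(site_a), find(site_b)
        if latency <= threshold_ms and root_a != root_b:
            parent[root_a] = root_b  # merge the two clusters

    groups = {}
    for site in parent:
        groups.setdefault(find(site), set()).add(site)
    return sorted(sorted(group) for group in groups.values())


# Hypothetical pairwise latencies in milliseconds.
measured = {
    ("A", "B"): 10,
    ("B", "C"): 15,
    ("C", "D"): 120,
    ("D", "E"): 8,
}

clusters = cluster_sites(measured, threshold_ms=20)
# clusters == [["A", "B", "C"], ["D", "E"]]
```

Rerunning the function on fresh measurements gives clusters that follow current network conditions, which is the "dynamically adapted" behavior mentioned in the quote, though a production system would weigh many more metrics than a single latency cutoff.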